Users of Linux, Mac OS X, or other Unix-like environments can install Hadoop and run it on one or more machines with no additional software beyond Java. If you are interested in doing this, instructions are available in the quickstart document on the Hadoop web site.

Running Hadoop on Windows requires installing Cygwin, a Linux-like environment that runs within Windows. Hadoop works reasonably well on Cygwin, but this setup is officially for "development purposes only." Hadoop on Cygwin may be unstable, and installing Cygwin itself can be cumbersome.

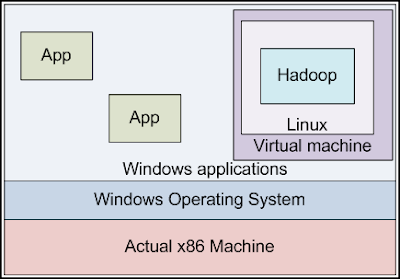

To aid developers in getting started easily with Hadoop, we have provided a virtual machine image containing a preconfigured Hadoop installation. The virtual machine image will run inside of a "sandbox" environment in which we can run another operating system. The OS inside the sandbox does not know that there is another operating environment outside of it; it acts as though it is on its own computer. This sandbox environment is referred to as the "guest machine" running a "guest operating system." The actual physical machine running the VM software is referred to as the "host machine" and it runs the "host operating system." The virtual machine provides other host-machine applications with the appearance that another physical computer is available on the same network. Applications running on the host machine see the VM as a separate machine with its own IP address, and can interact with the programs inside the VM in this fashion.

Application developers do not need to use the virtual machine to run Hadoop. Developers on Linux typically use Hadoop in their native development environment, and Windows users often install Cygwin for Hadoop development. The virtual machine provided with this tutorial offers a convenient alternative development platform that requires minimal configuration. Another advantage of the virtual machine is that it is easy to reset. If your experiments break the Hadoop configuration or render the operating system unusable, you can simply copy the virtual machine image from the CD back to where you installed it on your computer, and start again from a known-good state.

Our virtual machine will run Linux, and comes preconfigured to run Hadoop in pseudo-distributed mode on this system. (It is configured like a fully distributed system, but is actually running on a single machine instance.) We can write Hadoop programs using editors and other applications of the host platform, and run them on our "cluster" consisting of just the virtual machine. We will connect our host environment to the virtual machine through the network.

The virtual machine also runs on a Linux host. Linux users can install the virtual machine software and run the Hadoop VM as well; the same separation between host processes and guest processes applies.

Installing VMware Player

The virtual machine is designed to run inside VMware Player. A copy of the VMware Player installer (version 2.5) for both 32-bit Windows and Linux is included here (linux-rpm, linux-bundle, windows-exe). A Getting Started guide for VMware Player provides installation instructions. Review the license information for VMware Player before using it.

If you are running on a different operating system, or would prefer to download a more recent version of the player, an alternate installation strategy is to navigate to http://info.vmware.com/content/GLP_VMwarePlayer. You will need to register for a "virtualization starter kit." You will receive an email with a link to "Download VMware Player." Click the link, then click the "download now" button at the top of the screen under "most recent version" and follow the instructions. VMware Player is available for Windows or Linux. The latter is available in both 32- and 64-bit versions.

VMware Player itself is approximately a 170 MB download. When the download has completed, run the installer program and follow the prompts. On Windows, this is a standard installation wizard.

Setting up the Virtual Environment

Next, copy the Hadoop virtual machine to a location on your hard drive. It is a zipped VMware folder (hadoop-vm-appliance-0-18-0) that includes several files, among them a .vmdk file, which is a snapshot of the virtual machine's hard drive, and a .vmx file, which contains the configuration information needed to start the virtual machine. Unzip the folder, then start the virtual machine by double-clicking the hadoop-appliance-0.18.0.vmx file in Windows Explorer.

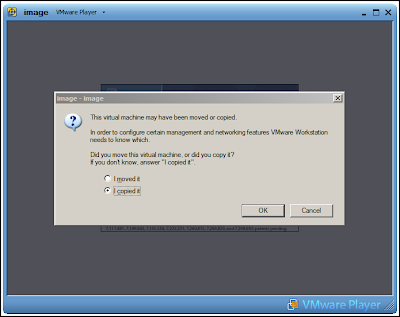

When you start the virtual machine for the first time, VMware Player will recognize that the virtual machine image is not in the same location it used to be. You should inform VMware Player that you copied this virtual machine image. VMware Player will then generate new session identifiers for this instance of the virtual machine. If you later move the VM image to a different location on your own hard drive, you should tell VMware Player that you have moved the image.

If you ever corrupt the VM image (e.g., by inadvertently deleting or overwriting important files), you can always restore a pristine copy of the virtual machine by copying a fresh VM image off of this tutorial CD. (So don't be shy about exploring! You can always reset it to a functioning state.)

After you tell VMware Player that you copied the image and click OK, the virtual machine should begin booting normally. You will see it perform the standard boot procedure for a Linux system. It will bind itself to an IP address on an unused network segment, and then display a login prompt.

Virtual Machine User Accounts

The virtual machine comes preconfigured with two user accounts: "root" and "hadoop-user". The hadoop-user account has sudo permissions to perform system management functions, such as shutting down the virtual machine. The vast majority of your interaction with the virtual machine will be as hadoop-user.

To log in as hadoop-user, first click inside the virtual machine's display. The virtual machine will take control of your keyboard and mouse. To return control to the host operating system at any time, press CTRL+ALT. The password for hadoop-user is hadoop; the password for root is root.

Running a Hadoop Job

Now that the VM is started, or you have installed Hadoop on your own system in pseudo-distributed mode, let us make sure that Hadoop is properly configured.

If you are using the VM, log in as hadoop-user, as directed above. You will start in your home directory: /home/hadoop-user. Typing ls, you will see a directory named hadoop/, as well as a set of scripts to manage the server. The virtual machine's hostname is hadoop-desk.

First, we must start the Hadoop system. Type the following command:

hadoop-user@hadoop-desk:~$ ./start-hadoop

If you installed Hadoop on your host system, use the following commands to launch Hadoop (assuming you installed to ~/hadoop):

you@your-machine:~$ cd hadoop

you@your-machine:~/hadoop$ bin/start-all.sh

You will see a set of status messages appear as the services boot. If prompted whether it is okay to connect to the current host, type "yes". Try running an example program to ensure that Hadoop is correctly configured:

hadoop-user@hadoop-desk:~$ cd hadoop

hadoop-user@hadoop-desk:~/hadoop$ bin/hadoop jar hadoop-0.18.0-examples.jar pi 10 1000000

This should provide output that looks something like this:

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

...

Wrote input for Map #10

Starting Job

INFO mapred.FileInputFormat: Total input paths to process: 10

INFO mapred.JobClient: Running job: job_200806230804_0001

INFO mapred.JobClient: map 0% reduce 0%

INFO mapred.JobClient: map 10% reduce 0%

...

INFO mapred.JobClient: map 100% reduce 100%

INFO mapred.JobClient: Job complete: job_200806230804_0001

...

Job Finished in 25.841 second

Estimated value of PI is 3.141688

This job estimates the value of pi by random sampling. The test first wrote out a number of points to a list of files, one per map task. The MapReduce job itself then calculated an estimate of pi from these points. How MapReduce works and how to write such a program are discussed in the next module. The Hadoop client program you ran launched the job, displayed progress updates while the job was running, and then displayed some final performance counters and the job-specific output: an estimate for the value of pi.
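The sampling idea can be tried outside Hadoop. The following is a minimal sketch in shell and awk, not Hadoop's own implementation: the function names count_inside and estimate_pi, the per-map seeds, and the use of awk's rand() are all assumptions made for illustration. Each simulated "map" counts random points that fall inside a quarter circle of radius 1, and a "reduce" step sums the counts. Because the quarter circle covers pi/4 of the unit square, four times the fraction of points inside approximates pi.

```shell
# Sketch only: mimics the shape of the pi job, not Hadoop's actual code.

# "Map" step (hypothetical helper): draw $2 random points in the unit square
# using seed $1, and print how many land inside the quarter circle.
count_inside() {
  awk -v seed="$1" -v n="$2" 'BEGIN {
    srand(seed)
    inside = 0
    for (i = 0; i < n; i++) {
      x = rand(); y = rand()
      if (x * x + y * y <= 1) inside++
    }
    print inside
  }'
}

# "Reduce" step (hypothetical helper): sum the per-map counts for $1 maps of
# $2 samples each, then scale the fraction inside to an estimate of pi.
estimate_pi() {
  maps="$1"
  samples="$2"
  total=0
  m=1
  while [ "$m" -le "$maps" ]; do
    inside=$(count_inside "$m" "$samples")
    total=$((total + inside))
    m=$((m + 1))
  done
  awk -v t="$total" -v n="$((maps * samples))" \
    'BEGIN { printf "%.6f\n", 4 * t / n }'
}
```

Running estimate_pi 10 10000 simulates ten map tasks, with far fewer samples per map than the job above. The exact digits printed depend on the random number generator in your version of awk.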

Accessing the VM via ssh

Rather than using the virtual machine's terminal directly, you can also log in "remotely" over ssh from the host environment. Using an ssh client such as PuTTY (in Windows), log in with username "hadoop-user" (password hadoop) to the IP address displayed in the virtual machine terminal when it starts up. You can now interact with this virtual machine as if it were another Linux machine on the network.

This can only be done from the host machine. The VMware image is, by default, configured to use host-only networking; only the host machine can talk to the virtual machine over its network interface. The virtual machine does not appear on the actual external network. This is done for security purposes.
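On a Linux or Mac OS X host, the same login can be done with the command-line ssh client instead of PuTTY. The helper below is a small sketch under that assumption; the function name vm_ssh is hypothetical, and the IP address in the usage note is a placeholder, so substitute the address your own virtual machine displays at startup.

```shell
# Hypothetical helper: open a session on the VM as hadoop-user, or run a
# single command there. $1 is the VM's IP address; any remaining arguments
# are passed to ssh as the remote command.
vm_ssh() {
  vm_ip="$1"
  shift
  ssh "hadoop-user@${vm_ip}" "$@"
}
```

For example, vm_ssh 192.168.65.128 opens an interactive shell (you will be prompted for the password), while vm_ssh 192.168.65.128 ls hadoop lists the Hadoop directory and returns.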

If you need to find the virtual machine's IP address later, the ifconfig command will display this under the "inet addr" field.
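If you want only the address rather than the full ifconfig listing, a filter such as the following may help. It is a sketch that assumes the "inet addr:" output format described above; the function name inet_addrs is hypothetical, and newer Linux systems print this field differently.

```shell
# Hypothetical helper: read ifconfig output on standard input and print the
# IPv4 address from each "inet addr" field, skipping loopback (127.x.x.x).
inet_addrs() {
  sed -n 's/.*inet addr:\([0-9.]*\).*/\1/p' | grep -v '^127\.'
}
```

Inside the virtual machine, run it as ifconfig | inet_addrs.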

Important security note: In the VMware settings, you can reconfigure the virtual machine for networked access rather than host-only networking. If you enable network access, you can access the virtual machine from anywhere else on the network via its IP address. In this case, you should change the passwords associated with the accounts on the virtual machine to prevent unauthorized users from logging in with the default password.

Shutting Down the VM

When you are done with the virtual machine, you can turn it off by logging in as hadoop-user and typing sudo poweroff. The virtual machine will shut itself down in an orderly fashion and the window it runs in will disappear.

